What Is an MCP Server? Why the Hype?

LLMs connecting to tools isn’t new. We’ve seen it with LangChain, plugins, and more. So what’s different this time?

Overview

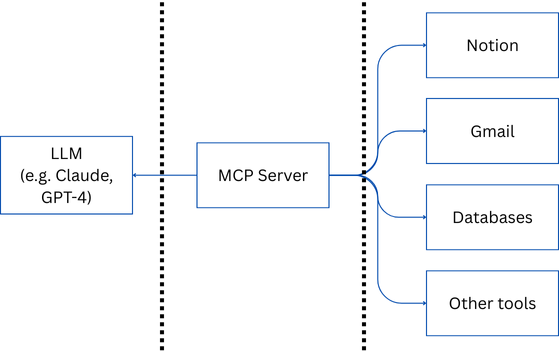

The Model Context Protocol (MCP) introduces a standardised, model-agnostic layer that lets large language models (LLMs) discover and invoke external tools and data sources. Rather than requiring bespoke “glue code” for each new integration, MCP servers expose a uniform interface, often described as a “USB-C port for AI”.

What Is an MCP Server?

An MCP server is simply a service that exposes one or more “tools” (e.g., email senders, database queries, file readers) under the Model Context Protocol specification. Any MCP-capable client, whether it is built on Claude, GPT-4, or an open-source alternative, can dynamically discover the available tools and invoke them over STDIO or HTTP/SSE transports.

- Tools become services: Gmail, Notion, SQL databases, or Zapier workflows can each run behind their own MCP server.

- Layer of indirection: The LLM speaks only MCP. The server handles API keys, authentication, sandboxing, and actual API calls.
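To make the “layer of indirection” concrete, here is a minimal in-process sketch. It is not the official MCP SDK: the `ToyToolServer` class, the `send_email` tool, and the placeholder API key are all hypothetical names invented for illustration. The point it tries to show is that a client only ever sees tool names, descriptions, and input schemas, while the credential stays inside the server.

```python
from typing import Any, Callable


class ToyToolServer:
    """Illustrative stand-in for an MCP server (not the official SDK)."""

    def __init__(self, api_key: str) -> None:
        self._api_key = api_key  # the secret never leaves the server
        self._tools: dict[str, dict[str, Any]] = {}

    def tool(self, description: str, input_schema: dict[str, Any]) -> Callable:
        """Decorator that registers a function as a named tool."""
        def register(fn: Callable) -> Callable:
            self._tools[fn.__name__] = {
                "description": description,
                "inputSchema": input_schema,
                "handler": fn,
            }
            return fn
        return register

    def list_tools(self) -> list[dict[str, Any]]:
        """What a client sees: names, descriptions, schemas; no secrets."""
        return [
            {
                "name": name,
                "description": entry["description"],
                "inputSchema": entry["inputSchema"],
            }
            for name, entry in self._tools.items()
        ]

    def call_tool(self, name: str, arguments: dict[str, Any]) -> str:
        """Run a tool by name, injecting the server-held credential."""
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        return self._tools[name]["handler"](self._api_key, **arguments)


server = ToyToolServer(api_key="hypothetical-secret")


@server.tool(
    description="Send an email",
    input_schema={
        "type": "object",
        "properties": {"to": {"type": "string"}, "subject": {"type": "string"}},
        "required": ["to", "subject"],
    },
)
def send_email(api_key: str, to: str, subject: str) -> str:
    # A real server would call the mail provider here using api_key.
    return f"queued email to {to}: {subject}"


print(server.list_tools())
print(server.call_tool("send_email", {"to": "a@example.com", "subject": "Order confirmed"}))
```

A production server would expose the same two operations over a transport rather than as direct method calls, but the division of responsibility would be the same.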

How MCP Works in Practice

- Server Registration: You run, for example, an n8n-based MCP server that wraps a suite of workflows (sending email, querying CRM data, etc.).

- Client Discovery: When the Claude client starts, it sends the MCP server a tools/list request to learn which actions are available.

- Invocation: Upon a user request (“Send an order confirmation email”), Claude sends a structured MCP request. The server executes the corresponding action, such as an SMTP send, and returns success or failure.

- Data Retrieval: For “Fetch my latest support tickets”, the LLM invokes the MCP server’s database tool, which runs a secure SQL query and streams the results back.
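The discovery and invocation steps above can be sketched as a pair of JSON-RPC 2.0 exchanges, which is the message style MCP uses (`tools/list` to discover, `tools/call` to invoke). This is a simplified sketch rather than a complete server: the initialization handshake and the transport are omitted, the `send_email` tool and its schema are hypothetical, and no email is actually sent.

```python
import json

# Hypothetical tool catalogue held by the server.
TOOLS = {
    "send_email": {
        "description": "Send an order confirmation email",
        "inputSchema": {
            "type": "object",
            "properties": {"to": {"type": "string"}},
            "required": ["to"],
        },
    }
}


def error(request_id, code: int, message: str) -> dict:
    """Build a JSON-RPC 2.0 error response."""
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}


def handle(request: dict) -> dict:
    """Answer a single JSON-RPC request for discovery or invocation."""
    method = request.get("method")
    if method == "tools/list":
        result = {"tools": [{"name": name, **meta} for name, meta in TOOLS.items()]}
    elif method == "tools/call":
        params = request["params"]
        if params["name"] not in TOOLS:
            return error(request["id"], -32602, f"Unknown tool: {params['name']}")
        # Stand-in for the real side effect (e.g. an SMTP send).
        text = f"email sent to {params['arguments']['to']}"
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return error(request.get("id"), -32601, "Method not found")
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


# Step 2: the client discovers what the server offers.
listing = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})

# Step 3: the client invokes a tool with structured arguments.
call = handle({
    "jsonrpc": "2.0",
    "id": 2,
    "method": "tools/call",
    "params": {"name": "send_email", "arguments": {"to": "customer@example.com"}},
})

print(json.dumps(listing, indent=2))
print(json.dumps(call, indent=2))
```

The data-retrieval step has the same shape: only the tool name and arguments in the `tools/call` request change.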

Why the Hype?

- True Standardization: Before MCP, each LLM-agent framework (LangChain, custom orchestrators) needed bespoke connectors for every API, an N×M integration nightmare. MCP replaces that with one discovery protocol and one invocation format for all tools.

- Model-Agnostic Plug-and-Play: Any LLM client that supports MCP gains instant access to thousands of tools, with no per-model engineering required. OpenAI announced MCP support for its Agents SDK in March 2025, with the ChatGPT desktop app to follow, and Google DeepMind announced support in April 2025.

- Ecosystem Momentum: Within months of MCP being open-sourced in November 2024, major toolmakers (Sourcegraph, Atlassian, Block) and IDEs (Zed, Replit) stood up MCP servers, citing easier maintenance and security boundaries.

- Enhanced Security & Isolation: Because MCP servers mediate all calls, enterprises can enforce permission checks, logging, and Docker-based sandboxing uniformly, something ad-hoc integrations often lacked.
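The N×M claim is simple arithmetic, and a quick sketch makes the gap visible. The counts below (5 frameworks, 40 tools) are made-up numbers chosen only for illustration.

```python
def connectors_without_protocol(frameworks: int, tools: int) -> int:
    """Every framework needs its own connector for every tool."""
    return frameworks * tools


def connectors_with_protocol(frameworks: int, tools: int) -> int:
    """Each framework implements the client once; each tool ships one server."""
    return frameworks + tools


print(connectors_without_protocol(5, 40))  # 200
print(connectors_with_protocol(5, 40))     # 45
```

Adding a forty-first tool costs five new connectors in the first model and a single new server in the second.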

Have We Not Had This Already?

LLMs could indeed invoke external APIs before MCP. LangChain, LlamaIndex, and custom plugins have long enabled LLMs to connect with tool workflows. However:

- Static vs. Dynamic: Earlier integrations required redeploying or reconfiguring agents to add new tools. MCP clients can discover a server’s tools at runtime.

- Diverse Transports: MCP standardises both local STDIO and remote HTTP/SSE, freeing developers from rolling custom IPC or REST conventions.

- Unified Schema: Instead of dozens of ad-hoc request formats, MCP wraps every tool call in the same JSON-RPC 2.0 request/response envelope (each tool still declares its own input schema), streamlining error handling and logging.
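One practical consequence of a shared response format is that a client can handle results and failures from any tool with one function. The sketch below assumes the JSON-RPC 2.0 envelope and the `content` / `isError` result shape used for MCP tool calls; the `unwrap` helper, the `ToolCallError` class, and the sample responses are hypothetical.

```python
class ToolCallError(RuntimeError):
    """Raised for both protocol-level and tool-level failures."""


def unwrap(response: dict) -> str:
    """Return the text of a tool result, or raise, regardless of which tool ran."""
    if "error" in response:
        # Protocol-level failure: the request itself was rejected.
        err = response["error"]
        raise ToolCallError(f"protocol error {err['code']}: {err['message']}")
    result = response["result"]
    text = "\n".join(
        part["text"] for part in result["content"] if part["type"] == "text"
    )
    if result.get("isError"):
        # Tool-level failure: the tool ran but reported a problem.
        raise ToolCallError(f"tool error: {text}")
    return text


# Hypothetical responses from three unrelated tools.
responses = [
    {"jsonrpc": "2.0", "id": 1,
     "result": {"content": [{"type": "text", "text": "3 open tickets"}], "isError": False}},
    {"jsonrpc": "2.0", "id": 2,
     "result": {"content": [{"type": "text", "text": "SMTP timeout"}], "isError": True}},
    {"jsonrpc": "2.0", "id": 3,
     "error": {"code": -32601, "message": "Method not found"}},
]

for response in responses:
    try:
        print("ok:", unwrap(response))
    except ToolCallError as exc:
        print("failed:", exc)
```

Because every tool answers in the same shape, logging and retry logic can live in this one place instead of being repeated per integration.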

Conclusion

An MCP server isn’t a new capability for LLMs per se, but rather the missing architectural layer that turns myriad bespoke integrations into a coherent, open ecosystem. By treating tools as discoverable, standardised services, MCP dramatically reduces engineering friction, improves security posture, and accelerates the development of rich, agentic AI applications. That shift from ad-hoc connectors to a universal protocol drives the hype.